Some things in life are certain: death, taxes, getting a ‘sorry I missed you’ card from the postman when you’ve just nipped to the loo for 2 minutes. Oh, and having the conversation about the accuracy of infant schools’ KS1 results whenever you find yourself in the same room as a junior school headteacher. This is a conversation I have regularly. If I had a pound for each time I’ve had this conversation, I reckon I’d have about £87 by now, which is nearly enough for a new pair of climbing shoes. I always need new climbing shoes.

Some time ago, a junior school head came to visit me in my office. She wanted to discuss the issue of KS1 data accuracy (obviously). I pushed my jar of pound coins towards her, strategically placed a climbing gear catalogue within line of sight, and prepared myself for some proper headteacher ranting. But this head didn’t want to rant; she wanted to take some action. She wanted to do stuff. She wanted data. Which is always nice.

So, after some discussion, we hatched a plan: to carry out CAT tests on entry in as many junior schools as possible. We had no idea if this project would be of any use, or what we would do with the data when we got it, but it sounded like positive action and we thought it would be pretty neat, too. In the end, after numerous meetings and emails, 13 out of the 20 junior schools in Gloucestershire got involved and a date in early October was set for their new Year 3 intakes to do the tests. Exciting!

The test itself is known as a PreA test and is specifically designed to be carried out early in year 3. If you’d like to learn more about these and other CAT tests, please contact GL Assessment.

I said above that we didn’t know what we would do with the data, which isn’t really true. I had a sort of, kind of idea. A CAT test provides scores for the pupil’s verbal, non-verbal and quantitative reasoning; it does not generate a level or sublevel that can be directly compared with the pupil’s KS1 results. However, like other CAT tests, the PreA test would provide an English and Maths estimate for the end of KS2 in the form of a sublevel. I thought it would be interesting to compare these estimates with those generated using RAISE VA methodology. Not exactly a perfect solution, but compelling, in a data-ery sort of way.

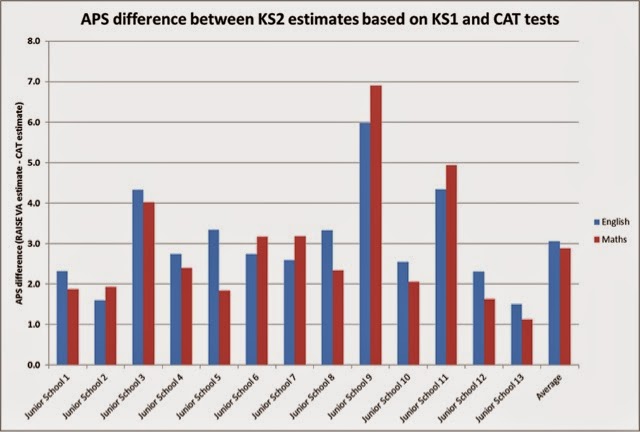

So, once the junior schools had carried out the PreA tests in October last year, they sent me the data. I then converted each pupil’s KS2 sublevel estimates generated by the tests into point scores (by the way, I don’t like using the term ‘APS’ here because they’re not averages. I’m pedantic like that). Next I put each pupil’s KS1 results into my VA calculator (more information on that here) to generate end of KS2 estimates using RAISE VA methodology, and took estimated point scores for each pupil. I now had two point score estimates for the end of KS2 for each Y3 pupil in the 13 junior schools taking part: one based on the CAT PreA test; the other based on their KS1 results. Neat! Now all I had to do was subtract the CAT estimate from the RAISE VA estimate (the former from the latter) to find which one was higher. Positive figures would indicate that the estimate derived from KS1 results was in advance of the one derived from the CAT test; negative figures would indicate the opposite.

‘So what?’ I hear you shout. Fair question, but bear in mind that it’s the RAISE VA estimate that the pupil’s progress is measured against (well, sort of, because, actually, their estimates won’t really be calculated until they’ve done their KS2 SATs, but we’re trying here, OK?). And if the RAISE VA estimate (i.e. that based on KS1) is always higher than the CAT estimate then this could be rather worrying, as it may indicate that the future VA bar will be set unrealistically high for those pupils.
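For anyone who wants to try the comparison themselves, here is a rough sketch of the arithmetic in Python. It is not my actual spreadsheet. The sublevel-to-points scale is an assumption (the usual 6 points per level, with c, b and a worth +1, +3 and +5, so 2b = 15 and 4b = 27), the function names are made up for illustration, and the pupils and their numbers are invented. The RAISE VA estimate is simply taken as an input here, because the VA calculation itself is a separate job. The gap is worked out as RAISE VA minus CAT, so in this sketch a positive figure means the KS1-based estimate is the higher of the two.

```python
# Assumed convention: 6 points per whole level, plus an offset for the sublevel letter.
SUBLEVEL_OFFSET = {"c": 1, "b": 3, "a": 5}


def sublevel_to_points(sublevel: str) -> int:
    """Convert a sublevel such as '4b' into a point score (assumed scale)."""
    level = int(sublevel[:-1])
    letter = sublevel[-1].lower()
    return 6 * level + SUBLEVEL_OFFSET[letter]


def estimate_gap(raise_va_points: float, cat_sublevel: str) -> float:
    """RAISE VA estimate minus CAT estimate, in points.

    Positive: the KS1-based (RAISE VA) estimate is higher.
    Negative: the CAT-based estimate is higher.
    """
    return raise_va_points - sublevel_to_points(cat_sublevel)


# Invented pupils, for illustration only:
# (RAISE VA estimate in points, CAT PreA estimate as a sublevel)
pupils = {
    "pupil_1": (28.4, "4b"),
    "pupil_2": (26.1, "4c"),
    "pupil_3": (30.0, "5c"),
}

gaps = {name: round(estimate_gap(va, cat), 1) for name, (va, cat) in pupils.items()}

for name, gap in gaps.items():
    higher = "KS1-based" if gap > 0 else "CAT-based" if gap < 0 else "neither"
    print(f"{name}: gap {gap:+.1f} points ({higher} estimate is higher)")
```

Swap in real estimates and the sign of each gap tells you, pupil by pupil, which estimate sets the higher bar.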

So what was the outcome?

Well, the estimates based on KS1 results were higher than those based on the CAT test in pretty much every case. I’m writing this at home without the full dataset in front of me but we’re talking about approximately 600 pupils here. It was quite startling. Wanna see some data? Course you do.


Anyway, that’s the end of this blog. Hope it was useful, or at least interesting.

Oh, and by the way, I am now a governor of a junior school and now own a new pair of climbing shoes.
