Reports of data’s death have been greatly exaggerated.

It’s over a year since Ofsted announced their intention to stop taking schools’ internal tracking data into account during inspections. I wrote this post at the time, outlining my thoughts, and on balance I still think it’s a good idea: it should cause schools to re-evaluate the purpose of data and give them the confidence to develop systems that are truly centred on teaching and learning rather than on accountability.

Evidently, we have a long way to go. The heavy industry of meaningless data production still thrives in many schools: concocted flight paths of quasi-levels and ‘working at’ grades linking one statutory assessment to another; neatly linear progression measures that give the illusion of pinpoint accuracy; and sprawling APP-style grids of learning objectives that conveniently auto-assess on a teacher’s behalf at the click of a button. All of this is a distraction, a huge waste of time, and is likely to have the opposite of the intended effect, both in terms of learning and workload.

But now, with the eye of Sauron focussed on the depths of the curriculum, we can press the hard reset button on assessment data and build accurate, insightful and proportionate systems from the classroom upwards with the needs of teachers foremost. Get that right and the rest (in theory) should take care of itself.

It has to be said that many schools were doing this anyway, and they rightly feel put out that what they consider to be good evidence of learning (results of standardised tests or comparative judgement, for example) will be completely disregarded in favour of book looks. Meaningful data becomes collateral damage in Ofsted’s fight against made-up numbers. With the new framework now in full swing, the issue persists, but those schools will no doubt stick with their approaches, confident that they have a positive impact on learning.

But it’s the position of other schools that is more concerning. Some may have interpreted Ofsted’s decision as a reason to put data on the back burner, to put off making changes to assessment practices, or to give up collecting data altogether.

“Well, Ofsted aren’t going to look at it anymore, are they?”

And that speaks volumes about the way data is perceived in some schools: as a servant of accountability, a way to keep the wolf from the door. But data is useful. It helps teachers identify potential issues in order to better support pupils; it allows senior leaders to make informed decisions; and it provides governors with the tools they need to ask challenging questions. Ditching or neglecting data as a result of Ofsted’s decision to ignore it is missing the point.

But if we’re going to let go of the comfort blanket of flight paths, quasi-levels, progress points, and endless tick lists of learning objectives, what’s left?

There’s a lot of useful data that can fill the void. Here are some examples:

Context

School systems hold a wealth of contextual information that’s of value to teachers.

- Mobility: number of school moves, time spent in each school, and the year the pupil joined their current school. What were the reasons for mobility, and how might achievement have been affected?

- Attendance: are there any significant periods of absence? What were the reasons for these?

- Month of birth: age within the year group has an impact on attainment. The gap narrows at each key stage, but it’s still worth knowing who the youngest in the class are. Are these the pupils who need more support?

- SEND: an obvious one but who are the pupils with SEND, what are their specific needs and how have these changed over time? Are there year groups and classes with higher proportions of SEND than others? What additional support have pupils received? How successful was it?

- EAL: again seems obvious, but how much is known about the varying needs of EAL pupils, the support they have received and how effective it has been?

- Disadvantaged pupils: which pupils are or have been eligible for free school meals in the last 6 years? Are there any looked after children in the cohort? Whilst not definitive, deprivation can be an indicator of lower achievement.

- Overlap between groups: are there pupils that fall into two or more vulnerable groups and are therefore at greater risk of falling behind?
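As a rough illustration of the overlap question, here is a minimal sketch that flags pupils falling into two or more of the groups above. It assumes a hypothetical pupil record (the `Pupil` fields are illustrative, not any real MIS export) and treats June to August births as "young for year", which is an adjustable assumption rather than an official definition.

```python
from dataclasses import dataclass

# Hypothetical pupil record: field names are illustrative and do not
# correspond to any particular management information system (MIS) export.
@dataclass
class Pupil:
    name: str
    birth_month: int      # 1 = January ... 12 = December
    send: bool
    eal: bool
    disadvantaged: bool   # e.g. FSM-eligible in the last 6 years, or looked after

# Assumption: in England the school year runs September to August, so pupils
# born in June, July or August are treated here as the youngest in the year.
YOUNGEST_MONTHS = {6, 7, 8}

def risk_flags(p: Pupil) -> list[str]:
    """List the contextual groups this pupil belongs to."""
    flags = {
        "SEND": p.send,
        "EAL": p.eal,
        "Disadvantaged": p.disadvantaged,
        "Young for year": p.birth_month in YOUNGEST_MONTHS,
    }
    return [label for label, present in flags.items() if present]

def overlapping(pupils: list[Pupil], min_groups: int = 2) -> list[tuple[str, list[str]]]:
    """Return (name, flags) for pupils in at least `min_groups` groups."""
    result = []
    for p in pupils:
        flags = risk_flags(p)
        if len(flags) >= min_groups:
            result.append((p.name, flags))
    return result

# Made-up class list, purely for illustration.
pupils = [
    Pupil("Pupil A", 7, send=True, eal=False, disadvantaged=True),
    Pupil("Pupil B", 10, send=False, eal=True, disadvantaged=False),
    Pupil("Pupil C", 8, send=False, eal=False, disadvantaged=True),
]

for name, flags in overlapping(pupils):
    print(name, flags)
```

The point is less the code than the habit: list the individual pupils and their combination of factors rather than reducing them to group percentages.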

Some may question whether access to this information is helpful or whether it increases assessment bias, and that is a conversation schools need to have. However, analysis shows that certain contextual factors, such as those outlined above, are strong indicators of underperformance, and it is therefore likely to be beneficial for teachers to be aware of them.

Results of statutory assessments

It is surprising how many teachers are unaware of pupils’ prior attainment and do not know where to find it, but knowledge of the results of statutory assessments is an important part of the jigsaw:

- EYFS: did all pupils reach a good level of development at the end of the reception year? If not, where were the gaps? Some pupils may have been emerging in one or two early learning goals whilst others may have been emerging in all of them. Did they narrowly miss them at the end of reception and meet them early in year one, or were they significantly below what is typical for their age? And of course some pupils may have exceeded some or all early learning goals. Does early years development correlate mainly with month of birth or are other factors at play?

- Phonics: did all pupils pass the phonics check? Did they pass it in year 1 or 2, or not at all? If they didn’t meet the standard, was it due to SEND, language or something else? Did they struggle with real or made-up words?

- KS1: did pupils meet expected standards in all subjects, or miss them in one, two or all? Were any pupils pre-key stage? Who achieved greater depth? What were the scaled scores in the reading and maths tests? Were there any discrepancies between test score and teacher assessment? For example, pupils who achieved a score of 100 or more but were assessed as working towards, or who scored below 100 and yet met the expected standard. What were the reasons for these discrepancies? Is there any question level analysis (QLA) of KS1 tests that may point to areas of weakness? And of those pupils who fell short of expected standards, which ones may or may not catch up at KS2?

- KS2: Did all pupils sit tests? If not, why not? Were any assessed as pre-key stage? Of those pupils who didn’t meet expected standards, did they fall short in just one subject or all of them? Did pupils narrowly miss the threshold or fall a long way short? Were any pupils granted special consideration? Did any achieve high scores in tests or greater depth in writing? Are there discrepancies between the subjects, especially between writing, which is teacher assessed, and reading, maths and grammar, punctuation and spelling, which are based on tests? Have you accessed the QLA of KS2 tests for pupils in your class? How do answers compare to national averages at item, pupil and domain level? If you are a secondary teacher, are you familiar with KS2 assessment and have you compared the KS2 results of feeder schools? How do KS2 results compare to your own baseline assessment? You could also convert pupils’ KS2 scaled scores into percentile rank using table N2b here for ongoing tracking.

- In future years we will also have the results of the multiplication tables check, which may provide some useful information. We will not, however, have the results from the reception baseline, but schools can keep their own running tally of tasks completed correctly and this could be recorded for other teachers to access.
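The KS1 discrepancy check described above is mechanical enough to automate. This is a minimal sketch, assuming each record is a hypothetical `(name, scaled_score, teacher_assessment)` tuple using the KS1 codes WTS (working towards), EXS (expected standard) and GDS (greater depth), with a scaled score of 100 marking the expected standard. Pre-key stage pupils and those without a test score are simply skipped.

```python
# In the KS1 reading and maths tests, a scaled score of 100 represents
# the expected standard.
EXPECTED_SCORE = 100

# KS1 teacher assessment codes that count as meeting the expected standard.
MEETS_EXPECTED = {"EXS", "GDS"}

def find_discrepancies(records):
    """Return pupils whose test outcome and teacher assessment disagree.

    Each record is (name, scaled_score or None, teacher_assessment_code).
    Pupils without a test score are skipped.
    """
    flagged = []
    for name, score, ta in records:
        if score is None:
            continue
        test_meets = score >= EXPECTED_SCORE
        ta_meets = ta in MEETS_EXPECTED
        if test_meets != ta_meets:
            reason = "test met, TA below" if test_meets else "TA met, test below"
            flagged.append((name, score, ta, reason))
    return flagged

# Made-up records, purely for illustration.
records = [
    ("Pupil A", 104, "WTS"),
    ("Pupil B", 97, "EXS"),
    ("Pupil C", 110, "GDS"),
    ("Pupil D", None, "WTS"),
]
for row in find_discrepancies(records):
    print(row)
```

The output is a short list of pupils to discuss, not a verdict: the reasons for each discrepancy still need a teacher’s explanation.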

Internal assessment data

This is the stuff that Ofsted won’t look at, but that doesn’t mean it isn’t useful information. It certainly should be; if it isn’t, stop gathering it.

- Baseline assessment: schools often make their own assessment at the beginning of reception and year 7. This is highly likely to be of interest to teachers of other year groups.

- Teacher assessment: most teachers record an assessment each term in core subjects in primary schools, or in a specific subject in secondary schools. Assuming assessments are robust and free from distortion, they will be of value to other teachers, and can be compared to the results of previous assessments or to targets, for example those based on FFT50.

- Standardised scores: the results of standardised tests (eg NFER, GL) provide teachers with unbiased data that show where pupils sit within a large, representative national sample. Tracking standardised scores over time can therefore reveal if pupils are falling behind or improving in relation to other pupils nationally, and help us understand if pupils are on track to meet certain grades or standards. For those subjects without commercially available standardised tests, tests could be developed and standardised internally, especially in MATs with large numbers of pupils.

- QLA: marking a test will provide useful insight, but many schools go further by making use of QLA tools. These show how pupils and cohorts have done compared to a national sample at both item and domain level. Unless the tests are online, QLA will probably involve entering hundreds of 1s and 0s into a spreadsheet or similar. Schools need to decide whether the benefits justify the workload.

- Percentile rank: you can convert standardised and scaled scores into percentile rank to produce a common currency. You could then track pupils’ national percentile rank over time, or simply their change in rank within the cohort from entry onwards (a zero-sum game in aggregate, but useful at individual pupil level). Again, MATs can use the power of their greater numbers to improve the reliability of percentiles.

- Comparative judgement: No More Marking is definitely worth investigating, especially if you are a primary school struggling with writing assessment. This will generate scaled scores, writing ages and percentages working at expected standards and greater depth. How do these compare to latest national figures, targets and results of previous assessments?

- Targets: if you use FFT, it is useful to be able to import estimates into your system so that all teachers, regardless of year, can access them. This can help improve understanding of how final results relate to prior attainment. Otherwise, set your own (if necessary, and I’m not convinced they are in primary schools). But if you do set targets, it helps if teachers can easily view them.

- Other types of data: raw scores from internal tests, reading ages, mock exam results, diagnostic screening, SEND and EAL specific assessments – there is a lot of data that can inform teachers and have impact.
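To make the percentile rank idea concrete, here is a minimal sketch of both approaches. It assumes the standardised test reports scores on the common scale with mean 100 and standard deviation 15 (check your test’s manual), so a normal distribution gives an approximate national percentile. KS2 scaled scores are not on this scale and need the DfE lookup table instead. The within-cohort version uses a mid-rank convention, which makes the cohort average exactly 50 and so shows why change in cohort rank is zero-sum in aggregate. All names and scores are made up.

```python
from statistics import NormalDist

# Assumption: standardised scores with mean 100 and SD 15.
# KS2 scaled scores are NOT on this scale.
NATIONAL = NormalDist(mu=100, sigma=15)

def national_percentile(standardised_score: float) -> float:
    """Approximate national percentile rank (0-100) of a standardised score."""
    return 100 * NATIONAL.cdf(standardised_score)

def cohort_percentiles(scores: dict[str, float]) -> dict[str, float]:
    """Percentile rank of each pupil within their own cohort.

    Mid-rank convention: pupils below count fully and ties (including the
    pupil themselves) count half, so the cohort average is always 50.
    """
    values = list(scores.values())
    n = len(values)
    ranks = {}
    for name, s in scores.items():
        below = sum(v < s for v in values)
        equal = sum(v == s for v in values)
        ranks[name] = 100 * (below + 0.5 * equal) / n
    return ranks

# Made-up autumn and summer standardised scores for a tiny cohort.
autumn = {"Pupil A": 92, "Pupil B": 105, "Pupil C": 118, "Pupil D": 100}
summer = {"Pupil A": 106, "Pupil B": 103, "Pupil C": 116, "Pupil D": 100}

for name in autumn:
    change = national_percentile(summer[name]) - national_percentile(autumn[name])
    print(f"{name}: national percentile change {change:+.1f}")

a_rank, s_rank = cohort_percentiles(autumn), cohort_percentiles(summer)
avg_change = sum(s_rank[p] - a_rank[p] for p in autumn) / len(autumn)
print("average change in cohort rank:", avg_change)
```

Individual pupils can move a long way in cohort rank while the average change stays at zero, which is why cohort rank is best used pupil by pupil rather than aggregated.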

Summary data

It’s inevitable that schools are going to have to report some data to someone somewhere at some point. External agencies, governors and MAT boards are the obvious examples, and for such audiences we require aggregated data presented in reports that summarise a school’s performance. Reporting requirements can be the driving force behind assessment and data collection in many schools, and the risk of distortion is clear if the stakes are high. Another problem is that many schools are still using abstract progress metrics that satisfy the data demands of various bodies without really telling anyone anything useful.

And finally, schools will be expected to break cohort data down into subgroups to show the performance of boys against girls, disadvantaged against non-disadvantaged pupils and so on. This is done in the belief that it will help tease out issues and set priorities, when really we are just looking at statistical noise. Small group sizes, overlap between groups, and the effect of outliers all mean that much of this group-level data is of limited value. These issues of subjective, biased and distorted data; non-standardised measures; and statistical noise all led Ofsted to decide to no longer take internal data into account, but that’s not going to stop schools being required to report on current performance.
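A quick way to see the small-group problem is to report, alongside each subgroup percentage, how many percentage points a single pupil is worth (100 divided by the group size). This is a minimal sketch over a made-up cohort of hypothetical pupil dictionaries; the field names `disadvantaged` and `met_expected` are illustrative only.

```python
def subgroup_summary(pupils, group_field):
    """Percentage meeting the expected standard in each subgroup, with n.

    Also returns how many percentage points one pupil is worth in that
    group, a rough guide to how easily the percentage can swing.
    """
    groups = {}
    for p in pupils:
        groups.setdefault(p[group_field], []).append(p["met_expected"])
    rows = []
    for group, results in groups.items():
        n = len(results)
        met = sum(results)  # True counts as 1
        rows.append((group, n, met, 100 * met / n, 100 / n))
    return rows

# Made-up cohort of 30 pupils: 25 non-disadvantaged, 5 disadvantaged.
# (The repeated dicts are shared objects, which is fine as nothing mutates them.)
cohort = (
    [{"disadvantaged": False, "met_expected": True}] * 20
    + [{"disadvantaged": False, "met_expected": False}] * 5
    + [{"disadvantaged": True, "met_expected": True}] * 3
    + [{"disadvantaged": True, "met_expected": False}] * 2
)

for group, n, met, pct, per_pupil in subgroup_summary(cohort, "disadvantaged"):
    print(f"disadvantaged={group}: {met}/{n} = {pct:.0f}% "
          f"(one pupil = {per_pupil:.0f} percentage points)")
```

In a group of five, a single pupil moves the figure by 20 percentage points, so an apparent "gap" can appear or vanish with one child.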

So, what can they report?

- Results of statutory assessments against national figures over time. This type of data is usually covered by ASP and FFT, although ASP doesn’t present a three-year time series for anything other than KS2 and KS4 results, and FFT doesn’t deal with EYFSP and phonics data (except for using the former as a baseline for KS1 value added). Primary schools are crying out for a useful (and free!) summary report that presents all their statutory data in an accessible format (preferably published by the end of July).

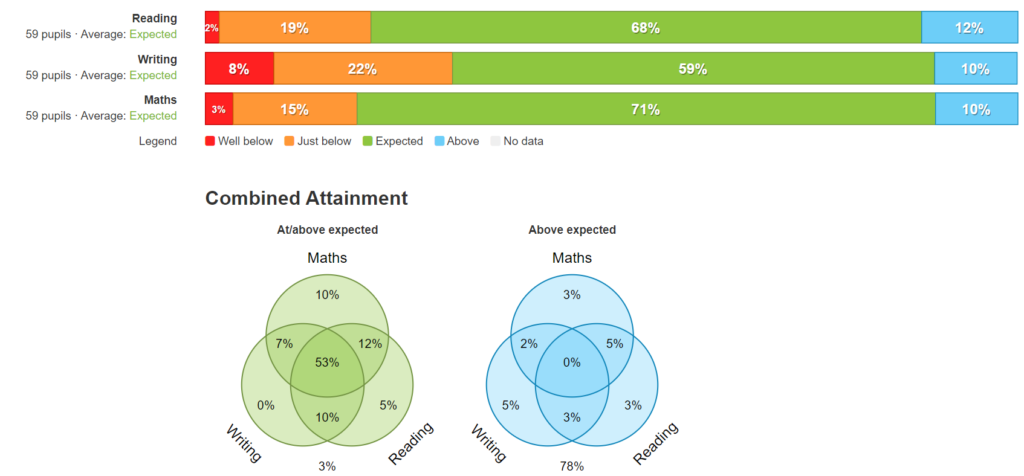

- Primary: the percentage of pupils in each year group at or above expectations (based on a mix of statutory, standardised and teacher assessment) in core subjects at key points (eg at KS1, end of last year, latest term) to convey (but not quantify) progress. Adding a column that shows the KS2 FFT50 estimate for reference may also be useful. This can all be presented on a single page. For pupils who are working below expectations, it’s best to present data on a case-by-case basis, perhaps using a table showing individual context and assessment data over time (with names removed). If you have a large number of pupils working below, then group-level data is perhaps unavoidable, but present it as numbers of pupils rather than percentages and averages (transition matrices do a good job of this). Governors – and anyone else looking at this data – should be asking what is being done to support these pupils, and averages are not going to tell them that.

- Secondary: KS4 data is inevitably going to focus on percentages of pupils on track to achieve certain grades compared to targets and to last year’s results, with scrutiny of those pupils who are off target. At KS3, who knows? But ‘working at’ or ‘working towards’ GCSE grades derived from some simplistic KS2-KS4 flight path sketched on a beer mat should be avoided. As mentioned previously, tracking national rank from KS2 tests is an option (eg does the school have an increasing proportion of pupils in the top half nationally?), but this only really works where standardised tests are available (ie in English, maths and maybe science). In the absence of standardised tests, schools can use their own tests to monitor a pupil’s position in the cohort relative to other pupils, but aggregation will turn this into a zero-sum game. MATs can use their greater numbers to standardise internal test scores, which may provide a better proxy for national position. If internal tests are to be graded, then a non-GCSE-style scale should be used to avoid any implied link to GCSE outcomes. A summary of effort grades may also be reported to governors. KS3 data is undeniably tricky.
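For the transition matrices mentioned above, the core is just a count of pupils by starting point and current position. Below is a minimal sketch that assumes hypothetical `(name, ks1_outcome, current_assessment)` records; the row and column labels are illustrative, so substitute whatever categories your school actually records. It deliberately reports counts rather than percentages.

```python
from collections import Counter

# Illustrative labels only: use your school's own outcome categories.
KS1_OUTCOMES = ["Pre-KS", "WTS", "EXS", "GDS"]
CURRENT = ["Below", "Expected", "Greater depth"]

def transition_matrix(records):
    """Count pupils by (KS1 outcome, current assessment): counts, not percentages."""
    counts = Counter((ks1, now) for _name, ks1, now in records)
    return [[counts[(row, col)] for col in CURRENT] for row in KS1_OUTCOMES]

def format_matrix(matrix):
    """Render the matrix as a plain-text table for a one-page report."""
    width = max(len(c) for c in CURRENT) + 2
    lines = ["KS1".ljust(8) + "".join(c.rjust(width) for c in CURRENT)]
    for label, row in zip(KS1_OUTCOMES, matrix):
        lines.append(label.ljust(8) + "".join(str(v).rjust(width) for v in row))
    return "\n".join(lines)

# Made-up records, purely for illustration.
records = [
    ("Pupil A", "WTS", "Below"),
    ("Pupil B", "WTS", "Expected"),
    ("Pupil C", "EXS", "Expected"),
    ("Pupil D", "EXS", "Expected"),
    ("Pupil E", "GDS", "Greater depth"),
    ("Pupil F", "EXS", "Below"),
]
print(format_matrix(transition_matrix(records)))
```

Each cell is a number of real pupils, which naturally prompts the governor’s question of who they are and what support they are getting.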

Of course, all of this relies on simple, accessible, adaptable systems that are capable of storing and analysing any data in any format, and that allow quick and easy retrieval. It’s all very well having lots of useful information but if no one can find it, or if they have to go through a gatekeeper to access it, or if it’s not presented in a readable format then it’s not going to be particularly effective. Don’t compromise your approach to fit the rules of a system, don’t make things unnecessarily complicated, don’t invent abstract metrics, don’t increase data workload except where warranted by learning improvements, and don’t lock useful data away unless it needs to be locked away.

Foster a more open, equitable and proportionate data culture in your school and everyone will benefit.

Bad data is dead; long live good data.