I admit that it took a while for the penny to drop. Since I accepted, and then embraced, the death of levels, I’ve been continually re-evaluating my thoughts on progress measures, and have had this nagging feeling for a while now: that our entire approach is not just flawed but is a fabrication, constructed to meet the ever-increasing pressures of accountability. We have come to accept these measures and made them part of the common language of assessment and tracking, even though they bear little or no relation to what happens in the classroom. Progress is actually an individual thing, occurring at different rates and dependent on numerous influencing factors, yet we pretend it isn’t so we can continue to produce numbers for those that demand them, never really questioning their validity. We have blindly stumbled into complicity, becoming willing participants in a major scam, and perhaps architects of our own downfall. But now, with levels gone, I think it’s time we did a little soul searching and asked ourselves a rather important question:

Can we really measure progress?

I know we want to, and feel we need to, and are told we have to, but what if it’s nonsense? What if it’s all done for the benefit of Ofsted and performance management? What if we’ve been making it all up all along? Ask yourself this: what is the main reason for measuring progress? And how does it benefit teaching and learning?

Often during talks I’ll put up a slide which asks the simple question: ‘what is expected progress?’ Regardless of the audience – HTs, DHTs, teachers, governors or NQTs – the answers are the same: ‘2 levels’ and ‘3 points per year’ are the usual responses. Someone will then announce that ‘in our school 4 points is expected progress’. Sadly, no one ever says ‘it depends on the pupil’, or, more controversially, ‘I don’t think there is any such thing’. It seems that we have completely bought into this expected progress myth where all pupils apparently follow some kind of magic, universal gradient regardless of start point and whose progress can be broken down into convenient, regular-sized blocks of equal value.

And how did we arrive at this situation? First we accepted that, in the case of Key Stage 2, 2 levels was expected progress for all pupils because level 2 was the expected level at the end of Key Stage 1 and level 4 was the expected level at the end of Key Stage 2. We then accepted that this rule applies to any start point. Point scores were assigned to broad levels and to the ill-defined sublevels. 2 ‘whole’ levels equated to 12 points and 12 divided by 4 years equals 3 points per year, so that must be the annual rate of expected progress, right? And 4 is greater than 3 and 4×4 is 16 so that must be better than expected, right? Simple.
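To see how little is going on underneath, here is that arithmetic as a minimal Python sketch. The figures (12 points for 2 whole levels, 4 years in Key Stage 2, 4 points as the 'better' rate) come from the paragraph above; the function and variable names are my own, purely for illustration.

```python
# Figures taken from the text: 2 'whole' levels = 12 points, KS2 = 4 years.
POINTS_FOR_TWO_LEVELS = 12
YEARS_IN_KEY_STAGE_2 = 4


def annual_rate(total_points: float, years: int) -> float:
    """Divide a key stage total by the number of years. That is all it does."""
    return total_points / years


# The 'expected' annual rate is just 12 / 4.
expected_per_year = annual_rate(POINTS_FOR_TWO_LEVELS, YEARS_IN_KEY_STAGE_2)

# The 'better than expected' total is just 4 points x 4 years.
better_than_expected_total = 4 * YEARS_IN_KEY_STAGE_2

print(f"Expected progress: {expected_per_year} points per year")
print(f"Better than expected: {better_than_expected_total} points over the key stage")
```

Nothing in this calculation refers to a pupil, a start point or anything that was actually learnt. It is one number divided by another.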

And of course 3 points didn’t fit neatly into sublevels so we end up with tracking of single ‘APS’ points, convincing ourselves that we know the difference between, say, a 3B and a 3B+. A single point of progress.

But what is a point?

Come to think of it, what is the point?

The point is to wring out as much progress as possible from the damp sponge of accountability.

These numbers were never about real progress. They do not in any way relate to the progress pupils have actually made. Rather, they are the result of dividing a baseless figure by the number of years in a key stage. Our systems have been built around a concept of expected progress that is based on an arbitrary scale between two ill-defined, best-fit and somewhat spurious end points. We were making the progress fit the numbers. Essentially we’ve been making it all up.

So let’s return to that question: what is expected progress? But now let’s consider it in a post-levels context. I recently tweeted the following:

This seems to have struck a chord yet inevitably these pupils will be categorised as having made ‘expected’ progress, possibly given an amber RAG rating, which these days is interpreted as ‘requiring improvement’. But if a pupil achieves pretty much everything expected of them by the end of one year, and does the same the next year, how can that be seen as anything other than good? How much does a pupil need to ‘master’ to stay on top of things, to remain at the ‘expected’ level?

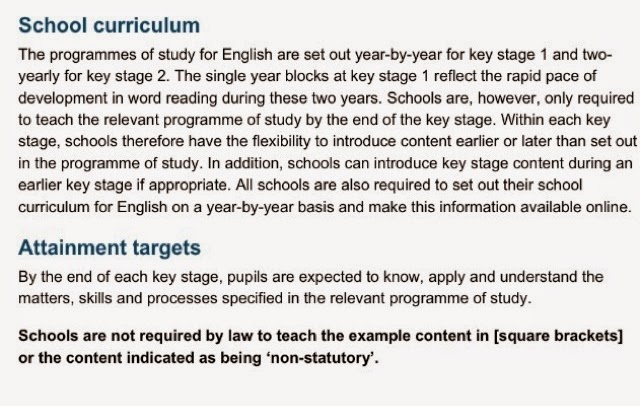

At this point I think some clarification is required. The following screenshot is taken from the latest programmes of study:

Learning is, of course, open ended and pupils are not limited to a specific list of objectives per year – this has become a bit of an urban myth – but in a curriculum of increased difficulty with emphasis on depth rather than pace of learning, many if not most pupils will find themselves constrained within the parameters of that year’s objectives, so how do we show better than expected progress? As I’ve blogged about here, many systems risk repeating the mistakes of the past in their approach to measuring progress in a post-levels world by equating ‘better than expected progress’ with accelerated pace through the curriculum. In other words, pupils will only be deemed to have made good progress if they cover more content than is expected. Systems are not really geared up to define good progress in terms of depth. And is depth something we can actually quantify with any degree of confidence anyway?

We will of course continue to attempt to measure progress, but I do not believe it can be done particularly effectively over short periods of a few weeks. Can we honestly quantify a change in such a short space of time? Or are we attempting to do it because we’re being told we have to?

And what about the long term, across key stage 2 for example? Is it fair or accurate to say that a pupil, progressing from being broadly in line with expectations at the end of KS1 to above expectations at KS2, has made the same progress as a pupil progressing from below expectations to meeting them; or made that many points more than a pupil remaining at the expected level; or done this much better than a pupil continuously below expectations? Any such interpretation relies on the assumption of progress being linear and following a universal gradient, which we are thankfully beginning to realise is not the case. When we tackle a problem, we never assume that it can be broken down into uniform blocks of equal difficulty – that’s not how problem solving works – so why have we built this assumption into progress measures?

And if progress isn’t linear, and ‘expected’ progress is individualised, then how can we compare pupils?

Some pupils will have difficulty grasping fundamental concepts but then accelerate once these become embedded. Others may acquire the fundamentals quickly only to plateau thereafter, struggling with the more advanced aspects. Some pupils will ‘master’ everything with ease whilst most will take longer to consolidate their learning and a few pupils never will. It clearly takes a lot more effort for some pupils to travel a short distance in the curriculum than it does for others to cover everything. In a traditional linear progress model, these pupils will be deemed to have made less progress than the high achievers, but is that right? In a linear model – a continuous point scale – a pupil who moves from being below age-related expectations to achieving objectives at the end of the year is deemed to have made the same amount of progress as a pupil progressing from age-related expectations to above. We have come to accept this as fact when in reality it’s grossly inaccurate. The progress made by these two pupils is different. The work is different. The effort is different. If we think about progress in terms of the effort required to acquire, consolidate and apply knowledge then it begins to take on a different meaning.
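The linear model's blind spot can be shown in a few lines. This is only a sketch: the three-step scale and its values are hypothetical, invented here to stand in for any continuous point scale, and are not taken from a real tracking system.

```python
# Hypothetical point scale: the values are illustrative assumptions only.
SCALE = {"below": 0, "at": 1, "above": 2}


def linear_progress(start: str, end: str) -> int:
    """Progress as a linear model sees it: end point minus start point."""
    return SCALE[end] - SCALE[start]


pupil_a = linear_progress("below", "at")  # below expectations -> meeting them
pupil_b = linear_progress("at", "above")  # meeting expectations -> above them

# The model reports identical progress for two very different journeys.
print(pupil_a == pupil_b)
```

The subtraction has no way of representing the work or effort behind each move, so the two pupils come out the same by construction.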

We may attempt to adapt our systems to allow for these variations in required effort by weighting objectives, assigning them different values based on perceived differences in difficulty. But there are problems here also – what is easy for one child will be difficult for another. If we asked the pupils themselves to weight the objectives in terms of their difficulty, would they all weight them in the same way?

Essentially, all we can do is teach the curriculum, state whether or not pupils have achieved objectives, and make a broad assessment of their position in relation to age-related expectations – below, at or above – at a given point in time. It may be possible to further subdivide such categories but only if they are useful and can be substantiated through pupils’ work. And as for progress measures, these need to be very carefully thought out. If we bow to pressure and devise data primarily for the purposes of accountability – to satisfy the needs of Ofsted, the LA and performance management – then we’ve lost the plot again and are barking up the wrong tree. We must resist the temptation to adhere to the orthodoxy of 4 points good; 3 points bad. That was never a tool for teaching, wasn’t rooted in any meaningful or accurate assessment, and harks back to one of the fundamental problems of levels: that we started with an arbitrary number and built an entire system around it.

So, when devising progress measures in future, ensure that you consider these two questions:

- Are they accurate?

- Do they have any impact on learning?

And be honest!

Further reading:

Russell Hobby’s blog on progress and school improvement:

Education data lab blog on linear progress: